BUSIFusion: Blind unsupervised single image fusion of hyperspectral and RGB images

IEEE Transactions on Computational Imaging

Published on February 10, 2023 by J. Li, Y. Li, C. Wang, X. Ye, and W. Heidrich

Unsupervised Image Fusion, Blind Fusion, Hyperspectral Image Fusion


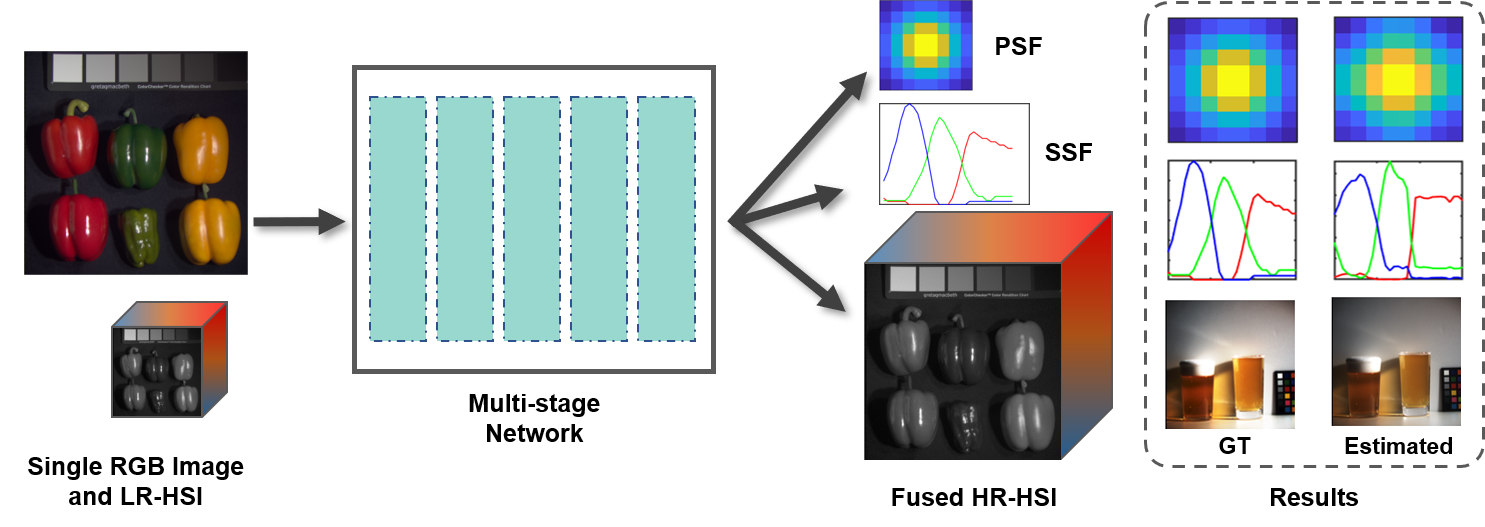

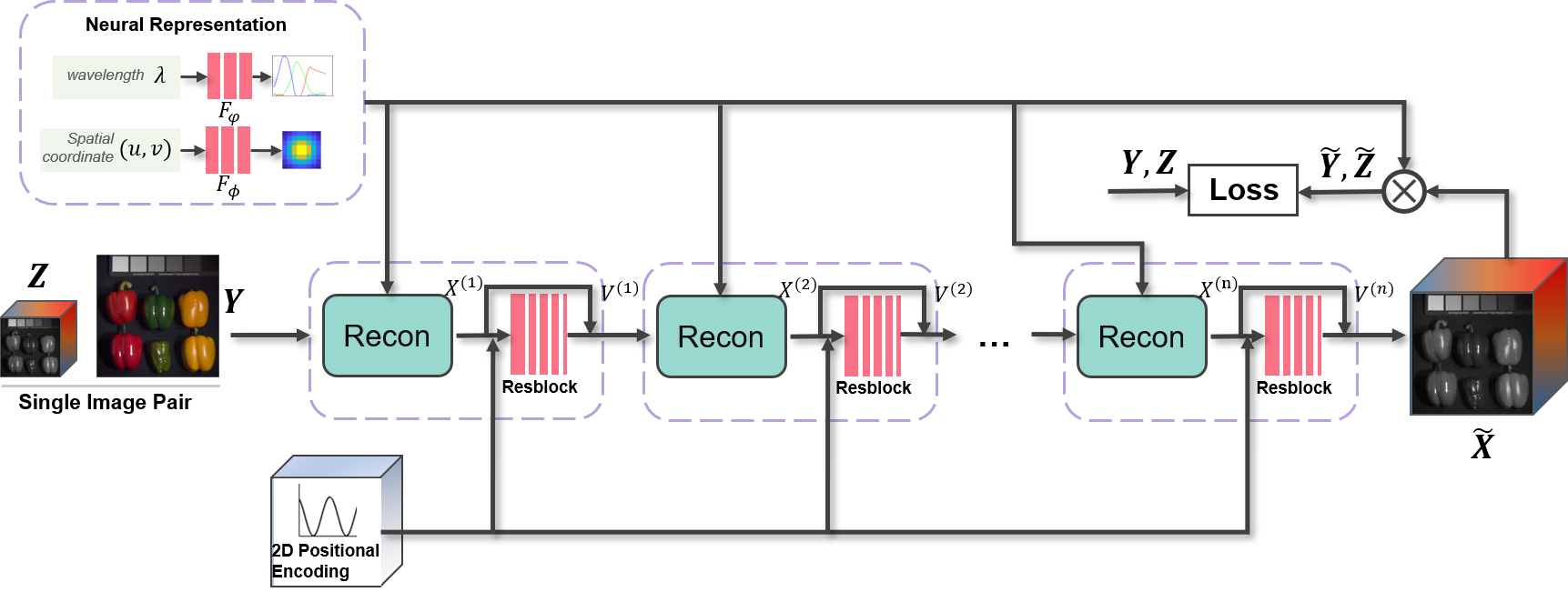

Hyperspectral images (HSIs) provide rich spectral information and are widely used in many computer vision tasks. However, their low spatial resolution often limits their use in applications such as image segmentation and recognition. Fusing a low-resolution HSI with a high-resolution RGB image to reconstruct a high-resolution HSI has therefore recently attracted considerable research attention. In this paper, we propose an unsupervised blind fusion network that operates on a single HSI and RGB image pair and requires neither known degradation models nor any training data. Our method takes full advantage of an unrolling network and coordinate encoding to achieve state-of-the-art HSI reconstruction. It also estimates the degradation parameters relatively accurately through a neural representation and implicit regularization of the degradation model. Experimental results on both simulated and real data demonstrate the effectiveness of our method, which outperforms other state-of-the-art non-blind and blind fusion methods on two popular HSI datasets. The code is available on GitHub, the dataset on Google Drive, and the paper as a PDF.
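The fusion problem rests on a degradation model that links the unknown high-resolution HSI to the two observations: the low-resolution HSI is a spatially blurred and downsampled copy, and the RGB image is a spectral projection of it. The NumPy sketch below illustrates that forward model under stated assumptions: blur plus downsampling is modeled as f x f average pooling, the RGB camera as a linear 3 x bands spectral response matrix (SRF), and the band count, scale factor, and random SRF are placeholders rather than the paper's settings. The function names `spatial_degrade` and `spectral_degrade` are hypothetical. In the blind setting the paper targets, the blur kernel and SRF are unknown and must be estimated rather than given.

```python
import numpy as np

def spatial_degrade(x, factor):
    """Hypothetical spatial degradation: box blur + decimation, i.e. factor x factor average pooling."""
    h, w, c = x.shape
    assert h % factor == 0 and w % factor == 0
    return x.reshape(h // factor, factor, w // factor, factor, c).mean(axis=(1, 3))

def spectral_degrade(x, srf):
    """Hypothetical spectral degradation: project each pixel's spectrum with a (3, bands) SRF."""
    return np.einsum("hwb,cb->hwc", x, srf)

rng = np.random.default_rng(0)
bands, factor = 31, 4                   # illustrative values, not the paper's configuration
x_hr = rng.random((32, 32, bands))      # stand-in for the unknown high-resolution HSI
srf = rng.random((3, bands))
srf /= srf.sum(axis=1, keepdims=True)   # assumption: each RGB channel's weights sum to 1

y_lr_hsi = spatial_degrade(x_hr, factor)  # low-resolution HSI observation
y_hr_rgb = spectral_degrade(x_hr, srf)    # high-resolution RGB observation
print(y_lr_hsi.shape, y_hr_rgb.shape)     # (8, 8, 31) (32, 32, 3)
```

In a simulation study, pairs like (`y_lr_hsi`, `y_hr_rgb`) are generated this way from a ground-truth HSI; a real camera's blur and spectral response would generally differ from these simple choices.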

The framework is an unrolling network based on a physical model:
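As a rough illustration of what unrolling a physical model means, the sketch below runs a fixed number of projected gradient steps on the two data-fidelity terms 0.5 * ||D(x) - y_hsi||^2 + 0.5 * ||R(x) - y_rgb||^2, where each loop iteration plays the role of one network stage. This is an assumption-laden stand-in, not BUSIFusion's architecture: the degradation is assumed to be average pooling with a known SRF (i.e. non-blind), the step size is fixed rather than learned, and clipping to [0, 1] replaces a learned prior module. All function names are hypothetical.

```python
import numpy as np

def down(x, f):
    # Assumed spatial degradation D: box blur + decimation = f x f average pooling.
    h, w, c = x.shape
    return x.reshape(h // f, f, w // f, f, c).mean(axis=(1, 3))

def down_adjoint(y, f):
    # D^T: copy each value over its f x f block and scale by 1/f^2.
    return np.repeat(np.repeat(y, f, axis=0), f, axis=1) / (f * f)

def rgb(x, srf):
    # Spectral degradation R: (H, W, bands) -> (H, W, 3).
    return np.einsum("hwb,cb->hwc", x, srf)

def rgb_adjoint(r, srf):
    # R^T: (H, W, 3) -> (H, W, bands).
    return np.einsum("hwc,cb->hwb", r, srf)

def data_loss(x, y_hsi, y_rgb, srf, f):
    return 0.5 * np.sum((down(x, f) - y_hsi) ** 2) + 0.5 * np.sum((rgb(x, srf) - y_rgb) ** 2)

def unrolled_fusion(y_hsi, y_rgb, srf, f, stages=10, step=0.3):
    # Initialization: nearest-neighbour upsampling of the low-resolution HSI.
    x = np.repeat(np.repeat(y_hsi, f, axis=0), f, axis=1)
    for _ in range(stages):
        # Gradient of both data-fidelity terms of the physical model.
        grad = down_adjoint(down(x, f) - y_hsi, f) + rgb_adjoint(rgb(x, srf) - y_rgb, srf)
        x = x - step * grad
        # Stand-in for a learned prior: project onto the valid range [0, 1].
        x = np.clip(x, 0.0, 1.0)
    return x

rng = np.random.default_rng(0)
f, bands = 4, 31                        # illustrative values
x_true = rng.random((32, 32, bands))
srf = rng.random((3, bands))
srf /= srf.sum(axis=1, keepdims=True)   # rows sum to 1 (assumption)
y_hsi, y_rgb = down(x_true, f), rgb(x_true, srf)

x0 = np.repeat(np.repeat(y_hsi, f, axis=0), f, axis=1)
x_hat = unrolled_fusion(y_hsi, y_rgb, srf, f)
print(data_loss(x0, y_hsi, y_rgb, srf, f), data_loss(x_hat, y_hsi, y_rgb, srf, f))
```

With nonnegative SRF rows summing to 1, the gradient's Lipschitz constant here is at most 1/f^2 + 3, so `step=0.3` stays below 1/L and the printed data loss should not increase from stage to stage. In a learned unrolling network, the per-stage step sizes and prior modules would typically be trainable, and a blind method would also estimate the degradation operators.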

The dataset:

Fusion results on real scenes: